Kubernetes agent targets are a mechanism for executing Kubernetes steps and monitoring application health from inside the target Kubernetes cluster, rather than via an external API connection.

Similar to the Octopus Tentacle, the Kubernetes agent is a small, lightweight application that is installed into the target Kubernetes cluster.

Benefits of the Kubernetes agent

The Kubernetes agent provides a number of improvements over the Kubernetes API target:

Polling communication

The agent uses the same polling communication protocol as Octopus Tentacle. This lets the agent initiate the connection from the cluster to Octopus Server, avoiding network access issues such as the need for a publicly addressable cluster.

In-cluster authentication

As the agent is already running inside the target cluster, Octopus Server no longer needs authentication credentials to the cluster to perform deployments. It can use the in-cluster authentication support of Kubernetes to run deployments using Kubernetes Service Accounts and Kubernetes RBAC local to the cluster.
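As a rough illustration of what in-cluster authentication means, the sketch below follows the standard Kubernetes convention a pod uses to reach its own cluster's API. The API server address comes from the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT environment variables, and the Service Account credentials are mounted under /var/run/secrets/kubernetes.io/serviceaccount. This is a generic Kubernetes pattern, not the agent's actual code; the helper names build_config and in_cluster_config are hypothetical.

```python
import os
from pathlib import Path

# Standard mount point for a pod's Service Account credentials.
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def build_config(host: str, port: str, token: str, namespace: str, ca_path: str) -> dict:
    """Assemble API connection settings for the pod's own cluster (hypothetical helper)."""
    return {
        "server": f"https://{host}:{port}",
        # The token authenticates as the pod's Service Account; cluster RBAC decides what it may do.
        "headers": {"Authorization": f"Bearer {token}"},
        "ca_cert": ca_path,
        "namespace": namespace,
    }


def in_cluster_config(sa_dir: Path = SA_DIR) -> dict:
    """Read the mounted Service Account files; only works when running inside a pod."""
    return build_config(
        host=os.environ["KUBERNETES_SERVICE_HOST"],
        port=os.environ.get("KUBERNETES_SERVICE_PORT", "443"),
        token=(sa_dir / "token").read_text().strip(),
        namespace=(sa_dir / "namespace").read_text().strip(),
        ca_path=str(sa_dir / "ca.crt"),
    )
```

No credentials are supplied from outside the cluster; everything comes from the pod's environment. Client libraries wrap the same convention, for example kubernetes.config.load_incluster_config() in the official Kubernetes Python client.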

Application monitoring

The agent also includes a component called the Kubernetes monitor that monitors and reports back application health to Octopus Server.

Cluster-aware tooling

As the agent is running in the cluster, it can retrieve the cluster’s version and use tooling that’s specific to that version. You also need far less tooling, as custom authentication plugins are no longer required. See the agent tooling section for more details.
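Cluster-aware tooling starts with knowing the cluster's Kubernetes version. The sketch below assumes the JSON printed by kubectl version -o json, where serverVersion.major and serverVersion.minor hold the version as strings. Some managed clusters report a minor version with a trailing "+" (for example "29+"), so it is stripped. The function name server_minor_version is hypothetical and is not how the agent itself queries the version.

```python
import json


def server_minor_version(kubectl_version_json: str) -> tuple[int, int]:
    """Return (major, minor) of the API server from `kubectl version -o json` output."""
    server = json.loads(kubectl_version_json)["serverVersion"]
    major = int(server["major"].rstrip("+"))
    # Some managed clusters report minor versions such as "29+".
    minor = int(server["minor"].rstrip("+"))
    return major, minor
```

To use it against a live cluster, pass it the stdout of subprocess.run(["kubectl", "version", "-o", "json"], capture_output=True, text=True).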

How the agent works

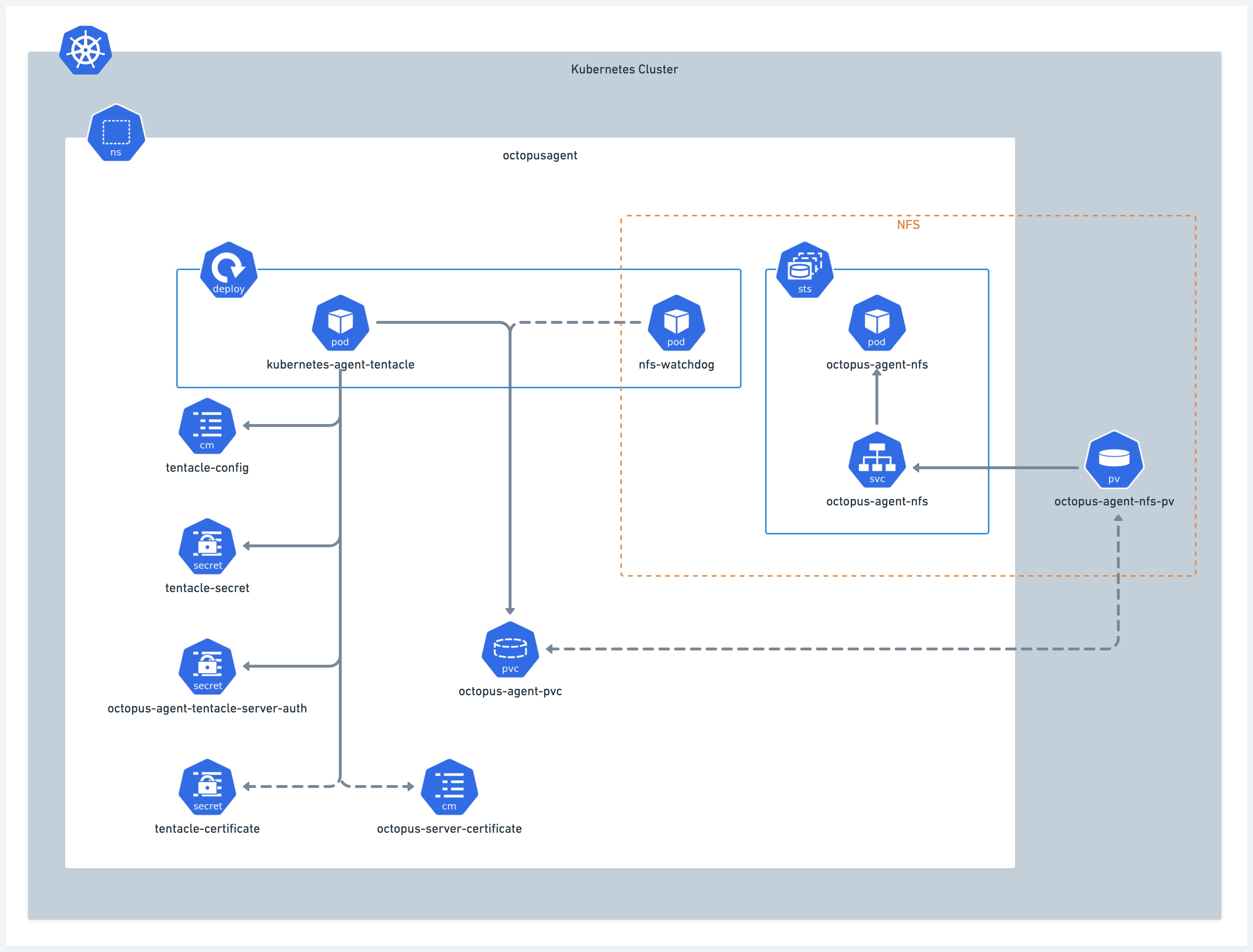

When you install the agent, several resources will be created within a cluster, all running in the same namespace. Please refer to the diagram below (some details such as ServiceAccounts have been omitted).

- Certain resource names may vary based on the target name and any overrides.

- The NFS section to the right is only created when no user-defined storageClassName is provided.

- tentacle-certificate is only created if you provide your own certificate during installation.

- octopus-server-certificate is created when you provide the full chain certificate for communicating back to Octopus Server (e.g. if it is self-signed).

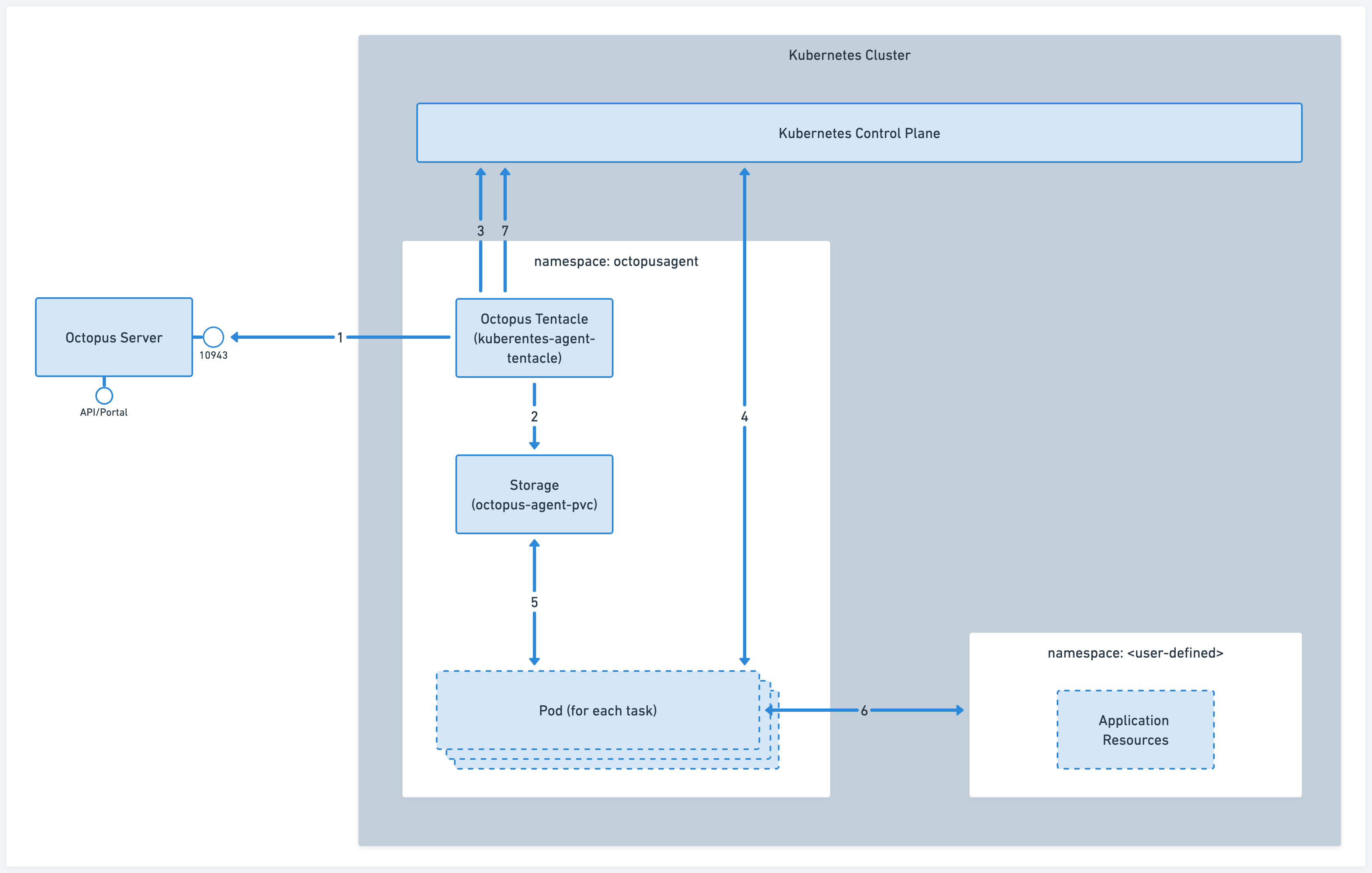

During a deployment, the agent generates temporary pods for each deployment task. These pods are not shown in the diagram above as they are not part of the installation process. Refer to the next diagram to understand how they are created and removed.

- Octopus Tentacle, which runs inside the kubernetes-agent-tentacle pod, maintains a connection to Octopus Server.

- Prior to task execution, various files and tools are transferred from Octopus Server to a shared storage location. This location will later be accessible by the tasks themselves.

- Octopus Tentacle creates a new pod to run each individual task, where all user-defined operations take place.

- The pod is created. Multiple pods can run simultaneously to accommodate various tasks within the cluster.

- The task accesses the shared storage and retrieves any required tools or scripts.

- The task is executed, and the customer application resources are created.

- While the task is running, the Octopus Tentacle pod streams the task pod logs back to Octopus Server.

Upon completion of the task, the pod terminates itself.
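To make the task pod lifecycle more concrete, here is a sketch of the kind of pod spec a one-shot task pod could use, built only from standard Kubernetes pod fields. restartPolicy Never means the pod runs once and finishes instead of restarting, and a volume backed by a shared PersistentVolumeClaim gives the task access to the tools and scripts staged there. This is not the manifest the agent actually generates: the function name, pod name prefix, mount path, image, and command are all hypothetical placeholders.

```python
def task_pod_manifest(task_id: str, image: str, claim_name: str, script_path: str) -> dict:
    """Build a one-shot pod that runs a staged script from shared storage (illustrative only)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        # Hypothetical naming scheme; pod names must be lower-case.
        "metadata": {"name": f"octopus-script-{task_id.lower()}"},
        "spec": {
            # Run once: when the script exits, the pod completes rather than restarting.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "script",
                    "image": image,
                    "command": ["bash", script_path],  # hypothetical entry point
                    "volumeMounts": [{"name": "shared-work", "mountPath": "/octopus"}],
                }
            ],
            # The shared storage written to before the task starts.
            "volumes": [
                {"name": "shared-work", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
        },
    }
```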

Requirements

The Kubernetes agent follows semantic versioning, so each major agent version is locked to an Octopus Server version range. Updating to the latest major agent version requires updating to a supported Octopus Server version. The supported versions for each agent major version are:

| Kubernetes agent | Octopus Server | Kubernetes cluster |
|---|---|---|
| 1.0.0 - 1.16.1 | 2024.2.6580 or newer | 1.26 to 1.29 |
| 1.17.0 - 1.19.2 | 2024.2.6580 or newer | 1.27 to 1.30 |
| 1.20.0 - 1.21.0 | 2024.2.6580 or newer | 1.28 to 1.31 |
| 1.22.0 - 1.*.* | 2024.2.6580 or newer | 1.29 to 1.32 |
| 2.0.0 - 2.2.1 | 2024.2.9396 or newer | 1.26 to 1.29 |
| 2.3.0 - 2.8.2 | 2024.2.9396 or newer | 1.27 to 1.30 |
| 2.9.0 - 2.11.3 | 2024.2.9396 or newer | 1.28 to 1.31 |
| 2.12.0 - 2.*.* | 2024.2.9396 or newer | 1.29 to 1.32 |

Additionally, the Kubernetes agent only supports Linux AMD64 and Linux ARM64 Kubernetes nodes.
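For a pre-flight check before installing or upgrading, the requirements can be expressed as data. The sketch below encodes the table rows above, treating open-ended ranges such as 1.22.0 - 1.*.* as "the rest of that major version", and also checks the Linux AMD64/ARM64 node requirement. Names such as SUPPORT_MATRIX and is_supported are hypothetical; the support policy remains the authoritative source.

```python
def parse(version: str) -> tuple[int, ...]:
    """Turn "2024.2.6580" or "1.16.1" into a comparable tuple of ints."""
    return tuple(int(part) for part in version.split("."))


# (first agent version, last agent version or None if open-ended,
#  minimum Octopus Server, min Kubernetes 1.x minor, max Kubernetes 1.x minor)
SUPPORT_MATRIX = [
    ("1.0.0", "1.16.1", "2024.2.6580", 26, 29),
    ("1.17.0", "1.19.2", "2024.2.6580", 27, 30),
    ("1.20.0", "1.21.0", "2024.2.6580", 28, 31),
    ("1.22.0", None, "2024.2.6580", 29, 32),
    ("2.0.0", "2.2.1", "2024.2.9396", 26, 29),
    ("2.3.0", "2.8.2", "2024.2.9396", 27, 30),
    ("2.9.0", "2.11.3", "2024.2.9396", 28, 31),
    ("2.12.0", None, "2024.2.9396", 29, 32),
]

SUPPORTED_NODE_ARCHS = {"amd64", "arm64"}  # Linux nodes only


def is_supported(agent: str, server: str, k8s_minor: int,
                 node_os: str = "linux", node_arch: str = "amd64") -> bool:
    """Check an agent version against a server version, cluster version, and node platform."""
    if node_os != "linux" or node_arch not in SUPPORTED_NODE_ARCHS:
        return False
    a = parse(agent)
    for first, last, min_server, lo, hi in SUPPORT_MATRIX:
        first_v = parse(first)
        # Open-ended rows cover the remainder of the same major version.
        in_range = first_v <= a and (a <= parse(last) if last else a[0] == first_v[0])
        if in_range:
            return parse(server) >= parse(min_server) and lo <= k8s_minor <= hi
    return False
```

For example, agent 2.0.0 is rejected on Octopus Server 2024.2.6580 because the 2.x line needs 2024.2.9396 or newer.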

See our support policy for more information.

Installing the Kubernetes agent

The Kubernetes agent is installed using Helm via the octopusdeploy/kubernetes-agent chart.

To simplify this, there is an installation wizard in Octopus to generate the required values.

Helm will use your current kubectl config, so make sure your kubectl config is pointing to the correct cluster before executing the following helm commands. You can see the current kubectl config by executing:

```bash
kubectl config view
```

Configuration

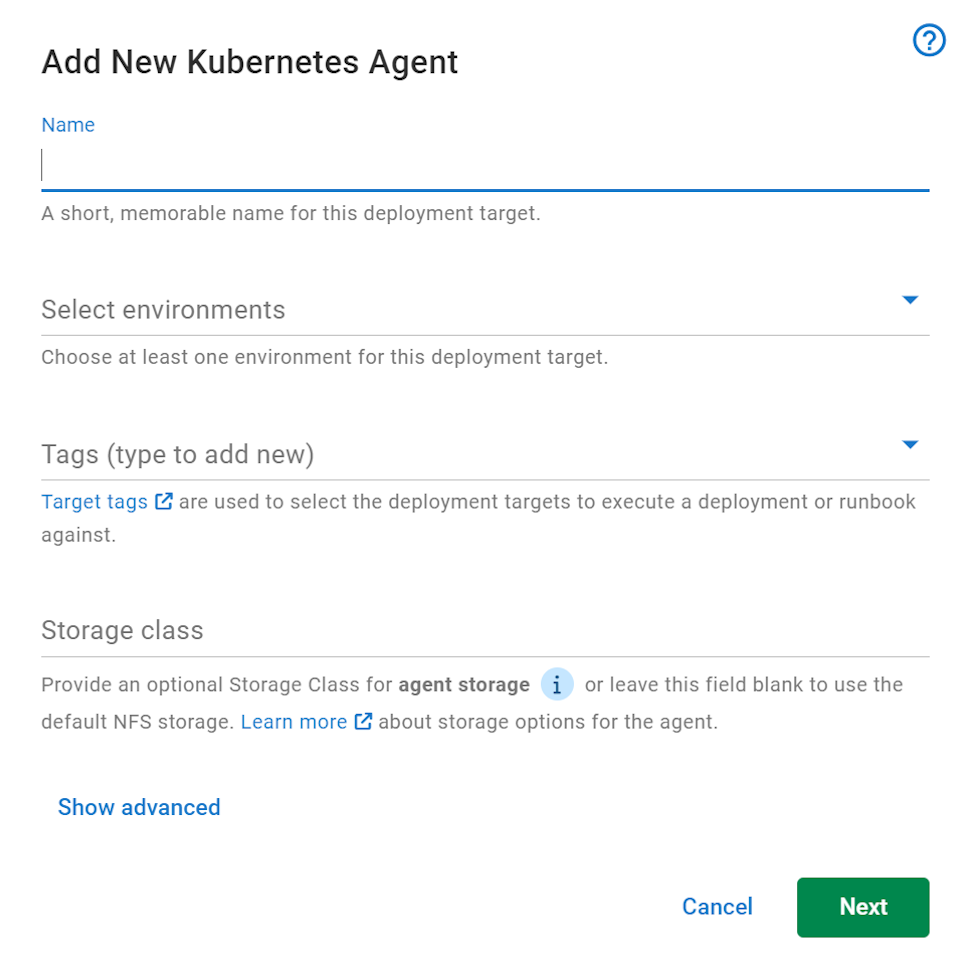

- Navigate to Infrastructure ➜ Deployment Targets, and click Add Deployment Target.

- Select KUBERNETES and click ADD on the Kubernetes Agent card.

- This launches the Add New Kubernetes Agent dialog.

- Enter a unique display name for the target. This name is used to generate the Kubernetes namespace, as well as the Helm release name.

- Select at least one environment for the target.

- Select at least one target tag for the target.

- Optionally, add the name of an existing Storage Class for the agent to use. The storage class must support the ReadWriteMany access mode.

If no storage class name is added, the default Network File System (NFS) storage will be used.
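ReadWriteMany matters because the agent pod and the script pods use the same shared storage at the same time. As a sketch, assuming you want to try a candidate Storage Class before installing, the function below builds a PersistentVolumeClaim (standard Kubernetes fields) that requests that access mode; if the class cannot provide it, the claim will typically fail to bind. The function name, claim name, and size are hypothetical.

```python
def rwx_claim(name: str, storage_class: str, size: str = "1Gi") -> dict:
    """A PersistentVolumeClaim requesting shared (ReadWriteMany) storage (hypothetical test claim)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Several pods need to mount the volume concurrently.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }
```

Serializing the result with json.dumps and piping it to kubectl apply -f - creates the claim, since kubectl accepts JSON manifests.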

As the display name is used for the Helm release name, this name must be unique for a given cluster. This means that if you have a Kubernetes agent and a Kubernetes worker with the same name (e.g. production), they will clash during installation.

If you do want a Kubernetes agent and a Kubernetes worker to have the same name, prepend the type to the name (e.g. worker production and agent production) during installation. This installs them with unique Helm release names, avoiding the clash. After installation, the worker and target names can be changed in the Octopus Server UI to the desired name to remove the prefix.
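To see why the clash happens, here is a rough sketch that derives a release name from a display name and groups names that collide. It assumes a simple normalization (lower-case, runs of non-alphanumeric characters replaced by a hyphen, trimmed to Helm 3's 53-character release name limit); Octopus's actual derivation may differ, so release_name and find_clashes are hypothetical helpers.

```python
import re

HELM_RELEASE_NAME_MAX = 53  # Helm 3 limit on release name length


def release_name(display_name: str) -> str:
    """Hypothetical slug: lower-case, collapse non-alphanumerics to '-', trim to Helm's limit."""
    slug = re.sub(r"[^a-z0-9]+", "-", display_name.lower()).strip("-")
    return slug[:HELM_RELEASE_NAME_MAX].rstrip("-")


def find_clashes(display_names: list[str]) -> dict[str, list[str]]:
    """Group display names that would produce the same release name in one cluster."""
    groups: dict[str, list[str]] = {}
    for name in display_names:
        groups.setdefault(release_name(name), []).append(name)
    return {rel: names for rel, names in groups.items() if len(names) > 1}
```

An agent and a worker both named production map to the same release name and are reported as a clash, while agent production and worker production do not collide.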

Advanced options

You can choose a default Kubernetes namespace that resources are deployed to. This is only used if the step configuration or Kubernetes manifests don’t specify a namespace.

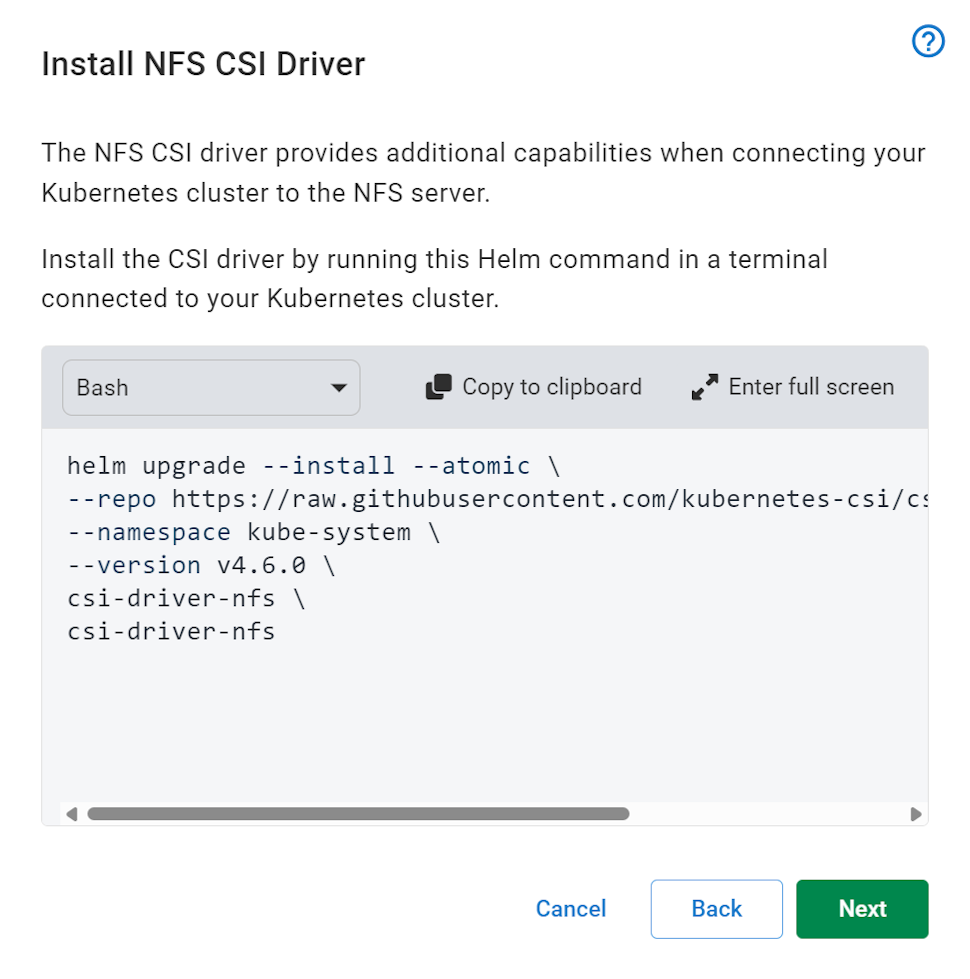

NFS CSI driver

If no Storage Class name is set, the default NFS storage pod will be used. This runs a small NFS pod next to the agent pod and provides shared storage to the agent and script pods.

A requirement of using the NFS pod is the installation of the NFS CSI Driver. This can be achieved by executing the presented helm command in a terminal connected to the target Kubernetes cluster.

If you receive an error with the text "failed to download" or "no cached repo found" when attempting to install the NFS CSI driver via helm, try executing the following command and then retrying the install command:

```bash
helm repo update
```

Installation helm command

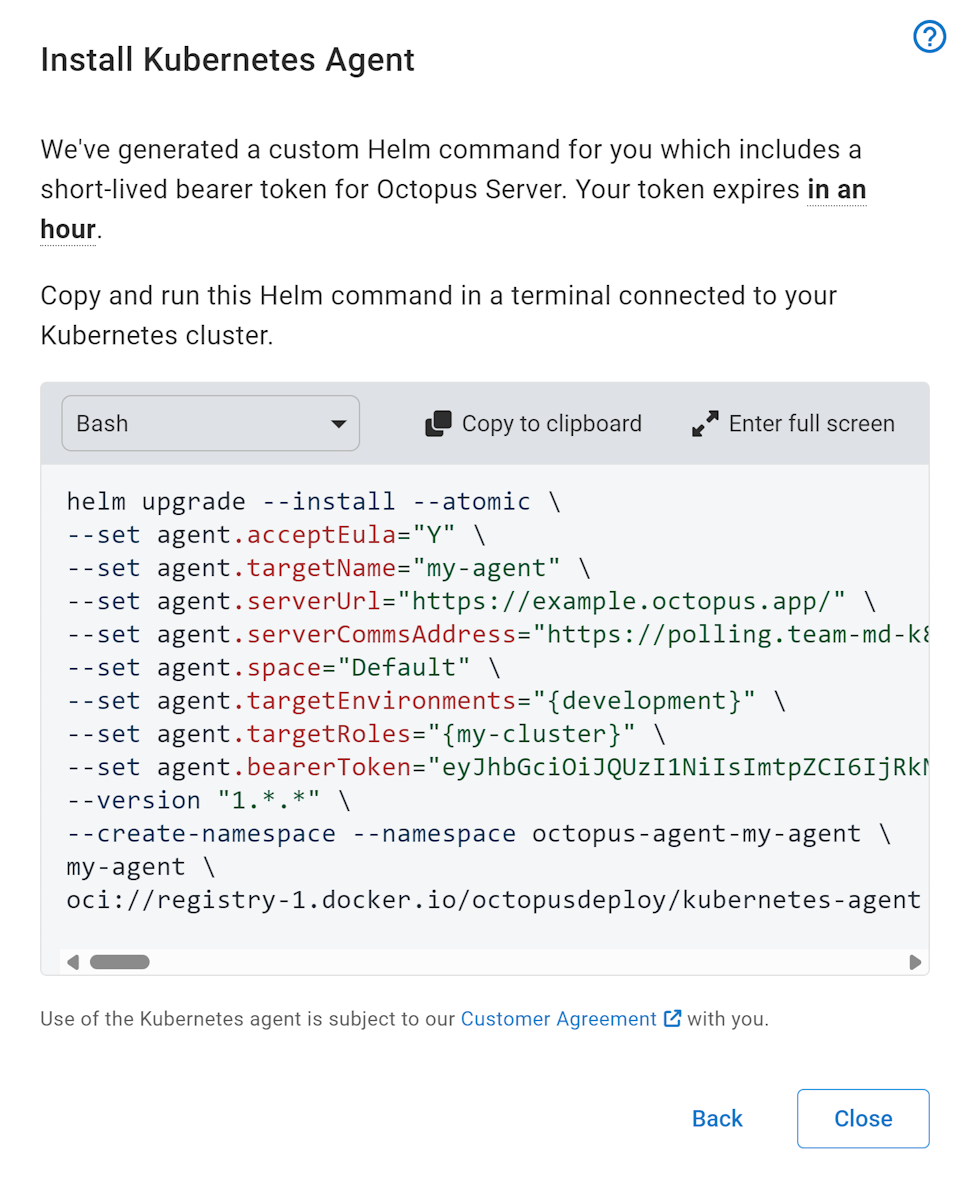

At the end of the wizard, Octopus generates a Helm command that you copy and paste into a terminal connected to the target cluster. After it’s executed, Helm installs all the required resources and starts the agent.

The helm command includes a bearer token, valid for one hour, that the agent uses when it first initializes to register itself with Octopus Server.

The terminal Kubernetes context must have enough permissions to create namespaces and install resources into that namespace. If you wish to install the agent into an existing namespace, remove the --create-namespace flag and change the value after --namespace to the name of the existing namespace.
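If you regularly install into pre-created namespaces, that edit can be scripted. The sketch below takes the generated command as text, joins backslash-continued lines, drops --create-namespace, and replaces the value following --namespace. It assumes the namespace is passed as a separate argument (--namespace NAME, as in the generated command) rather than --namespace=NAME; the function name use_existing_namespace is hypothetical.

```python
import shlex


def use_existing_namespace(command: str, namespace: str) -> str:
    """Rewrite a generated `helm upgrade --install` command to target an existing namespace."""
    # shlex does not treat backslash-newline as a line continuation, so join lines first.
    args = shlex.split(command.replace("\\\n", " "))
    result = []
    i = 0
    while i < len(args):
        arg = args[i]
        if arg == "--create-namespace":
            i += 1  # drop the flag entirely
            continue
        if arg == "--namespace" and i + 1 < len(args):
            result += ["--namespace", namespace]
            i += 2  # skip the old namespace value
            continue
        result.append(arg)
        i += 1
    # Re-quote tokens that need it (for example "{a,b}" list values) and return one line.
    return shlex.join(result)
```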

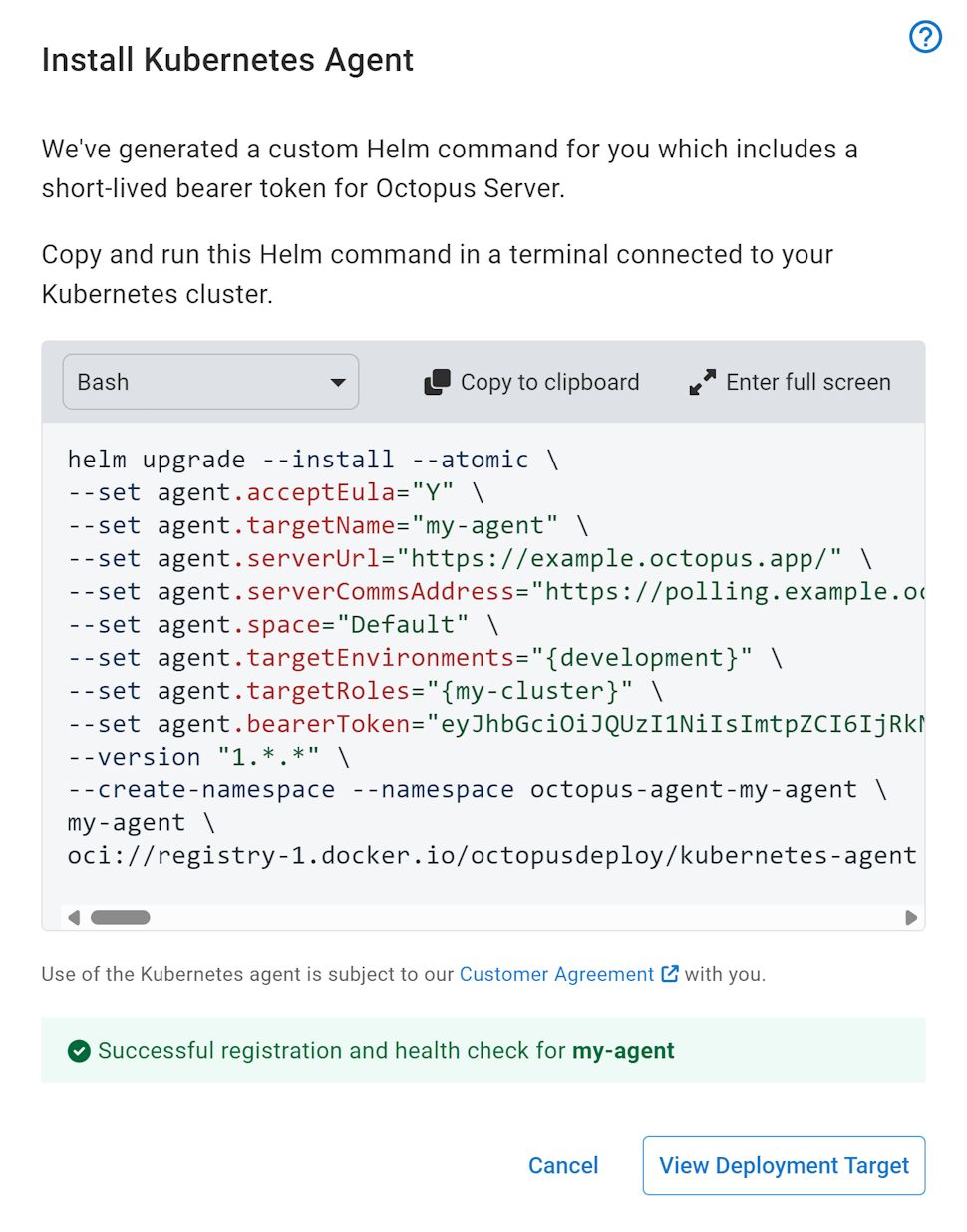

If left open, the installation dialog waits for the agent to establish a connection and run a health check. Once successful, the Kubernetes agent target is ready for use!

A successful health check indicates that deployments can successfully be executed.

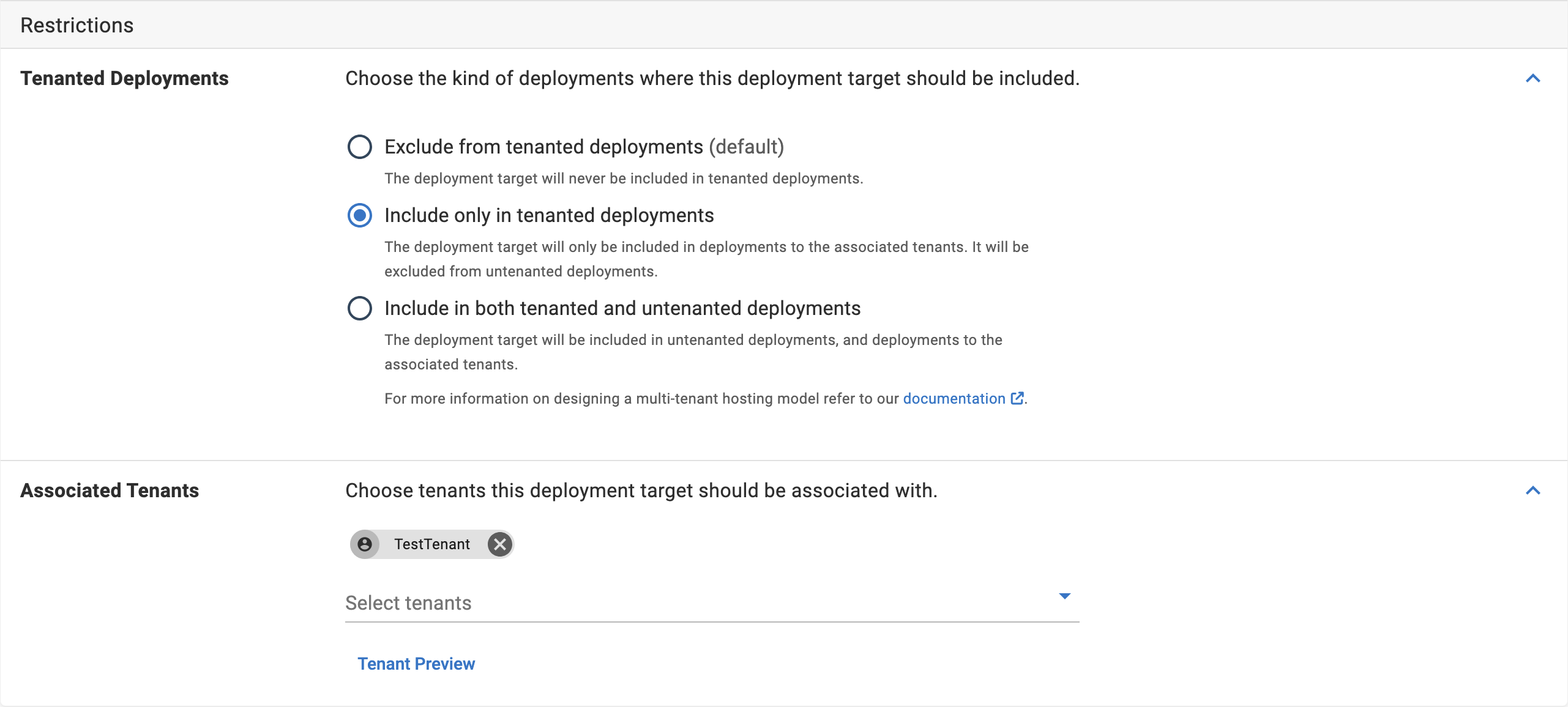

Configuring the agent with Tenants

While the wizard doesn’t support selecting Tenants or Tenant tags, the agent can be configured for tenanted deployments in two ways:

- Use the Deployment Target settings UI at Infrastructure ➜ Deployment Targets ➜ [DEPLOYMENT TARGET] ➜ Settings to add a Tenant and set the Tenanted Deployment Participation as required. This is done after the agent has successfully installed and registered.

- Set additional variables in the helm command to allow the agent to register itself with associated Tenants or Tenant tags. You also need to provide a value for the TenantedDeploymentParticipation value. Possible values are Untenanted (default), Tenanted, and TenantedOrUntenanted.

For example, to add these values:

```bash
--set agent.tenants="{<tenant1>,<tenant2>}" \
--set agent.tenantTags="{<tenantTag1>,<tenantTag2>}" \
--set agent.tenantedDeploymentParticipation="TenantedOrUntenanted" \
```

You don’t need to provide both Tenants and Tenant tags, but you do need to provide the tenanted deployment participation value.
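If you generate installation commands from a script, the tenant flags can be built programmatically. The sketch below formats lists with Helm's {a,b} list syntax for --set, always includes the participation value, and rejects anything other than the three documented values. Because commas and braces are part of that list syntax, this sketch simply refuses names containing them rather than attempting to escape them. The function names are hypothetical.

```python
VALID_PARTICIPATION = {"Untenanted", "Tenanted", "TenantedOrUntenanted"}


def helm_list(items: list[str]) -> str:
    """Format values with Helm's --set list syntax, e.g. {a,b}."""
    for item in items:
        # Commas and braces are list syntax for --set; refuse them instead of escaping.
        if any(ch in item for ch in ",{}"):
            raise ValueError(f"unsupported character in {item!r}")
    return "{" + ",".join(items) + "}"


def tenant_set_args(participation: str, tenants=(), tenant_tags=()) -> list[str]:
    """Build --set arguments for tenanted registration (argv form, so no shell quoting)."""
    if participation not in VALID_PARTICIPATION:
        raise ValueError(f"participation must be one of {sorted(VALID_PARTICIPATION)}")
    args = []
    if tenants:
        args += ["--set", f"agent.tenants={helm_list(list(tenants))}"]
    if tenant_tags:
        args += ["--set", f"agent.tenantTags={helm_list(list(tenant_tags))}"]
    # The participation value is required even when tenants and tags are omitted.
    args += ["--set", f"agent.tenantedDeploymentParticipation={participation}"]
    return args
```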

In a full command:

```bash
helm upgrade --install --atomic \
--set agent.acceptEula="Y" \
--set agent.targetName="<name>" \
--set agent.serverUrl="<serverUrl>" \
--set agent.serverCommsAddress="<serverCommsAddress>" \
--set agent.space="Default" \
--set agent.targetEnvironments="{<env1>,<env2>}" \
--set agent.targetRoles="{<targetRole1>,<targetRole2>}" \
--set agent.tenants="{<tenant1>,<tenant2>}" \
--set agent.tenantTags="{<tenantTag1>,<tenantTag2>}" \
--set agent.tenantedDeploymentParticipation="TenantedOrUntenanted" \
--set agent.bearerToken="<bearerToken>" \
--version "1.*.*" \
--create-namespace --namespace <namespace> \
<release-name> \
oci://registry-1.docker.io/octopusdeploy/kubernetes-agent
```

Trusting custom/internal Octopus Server certificates

Server certificate support was added in Kubernetes agent 1.7.0

It is common for organizations to have their Octopus Deploy server hosted in an environment where it has an SSL/TLS certificate that is not part of the global certificate trust chain. As a result, the Kubernetes agent will fail to register with the target server due to certificate errors. A typical error looks like this:

```text
2024-06-21 04:12:01.4189 | ERROR | The following certificate errors were encountered when establishing the HTTPS connection to the server: RemoteCertificateNameMismatch, RemoteCertificateChainErrors
Certificate subject name: CN=octopus.corp.domain
Certificate thumbprint: 42983C1D517D597B74CDF23F054BBC106F4BB32F
```

To resolve this, you need to provide the Kubernetes agent with a base64-encoded string of the public key of either the self-signed certificate or the root organization CA certificate, in either .pem or .crt format. When viewed as text, it will look similar to this:

```text
-----BEGIN CERTIFICATE-----
MII...
-----END CERTIFICATE-----
```

Once encoded, this string can be provided as part of the agent installation helm command via the agent.serverCertificate helm value.
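Any base64 tool can do the encoding. As a minimal sketch, assuming the certificate is stored as PEM text (a binary DER file would need converting first) and that the whole file, including the BEGIN/END lines, is what gets encoded, Python's standard library is enough. The function names encode_certificate and server_certificate_arg are hypothetical.

```python
import base64
from pathlib import Path


def encode_certificate(pem_text: str) -> str:
    """Base64-encode a PEM certificate as a single line with no wrapping."""
    if "-----BEGIN CERTIFICATE-----" not in pem_text:
        raise ValueError("expected a PEM-encoded certificate")
    return base64.b64encode(pem_text.encode("ascii")).decode("ascii")


def server_certificate_arg(pem_path: str) -> list[str]:
    """Build the --set argument for agent.serverCertificate from a certificate file."""
    encoded = encode_certificate(Path(pem_path).read_text())
    return ["--set", f"agent.serverCertificate={encoded}"]
```

Encoding to a single line matters because a wrapped value would be split across lines when pasted into the helm command.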

To include it, add the following to the generated installation command:

```bash
--set agent.serverCertificate="<base64-encoded-cert>"
```

Agent tooling

For all Kubernetes steps except the Run a kubectl script step, the agent uses the default octopusdeploy/kubernetes-agent-tools-base container image to execute its workloads, selecting the version of the image that matches the cluster’s Kubernetes version.

For the Run a kubectl script step, if there is a container image defined in the step, then that container image is used. If one is not specified, the default container image is used.

In Octopus Server versions prior to 2024.3.7669, the Kubernetes agent erroneously used container images defined in all Kubernetes steps, not just the Run a kubectl script step.

This image contains the minimum required tooling to run Kubernetes workloads for Octopus Deploy, namely:

- kubectl

- helm

- powershell
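The image selection rule described above can be summarised in a few lines. This is an illustration only: the step name comparison and the tag format for the default image (one tag per Kubernetes minor version, such as 1.29) are assumptions, not the agent's real naming scheme, and select_image is a hypothetical function.

```python
from typing import Optional

DEFAULT_IMAGE = "octopusdeploy/kubernetes-agent-tools-base"
KUBECTL_SCRIPT_STEP = "Run a kubectl script"


def select_image(step_name: str, step_image: Optional[str], cluster_version: tuple[int, int]) -> str:
    """Pick the container image used to run a Kubernetes step (illustrative rule only)."""
    if step_name == KUBECTL_SCRIPT_STEP and step_image:
        # Only the kubectl script step honours a custom container image.
        return step_image
    major, minor = cluster_version
    # Hypothetical tag scheme: one tools image per Kubernetes minor version.
    return f"{DEFAULT_IMAGE}:{major}.{minor}"
```

A custom image on any other Kubernetes step is ignored in this sketch, matching the behavior described for Octopus Server 2024.3.7669 and later.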

Upgrading the Kubernetes agent

The Kubernetes agent can be upgraded automatically by Octopus Server, manually in the Octopus portal or via a helm command.

Automatic updates

Automatic updating was added in 2024.2.8584

By default, the Kubernetes agent is automatically updated by Octopus Server when a new version is released. These version checks typically occur after a health check. When an update is required, Octopus will start a task to update the agent to the latest version.

This behavior is controlled by the Machine Policy associated with the agent. You can change this behavior to Manually in the Machine policy settings.

Manual updating via Octopus portal

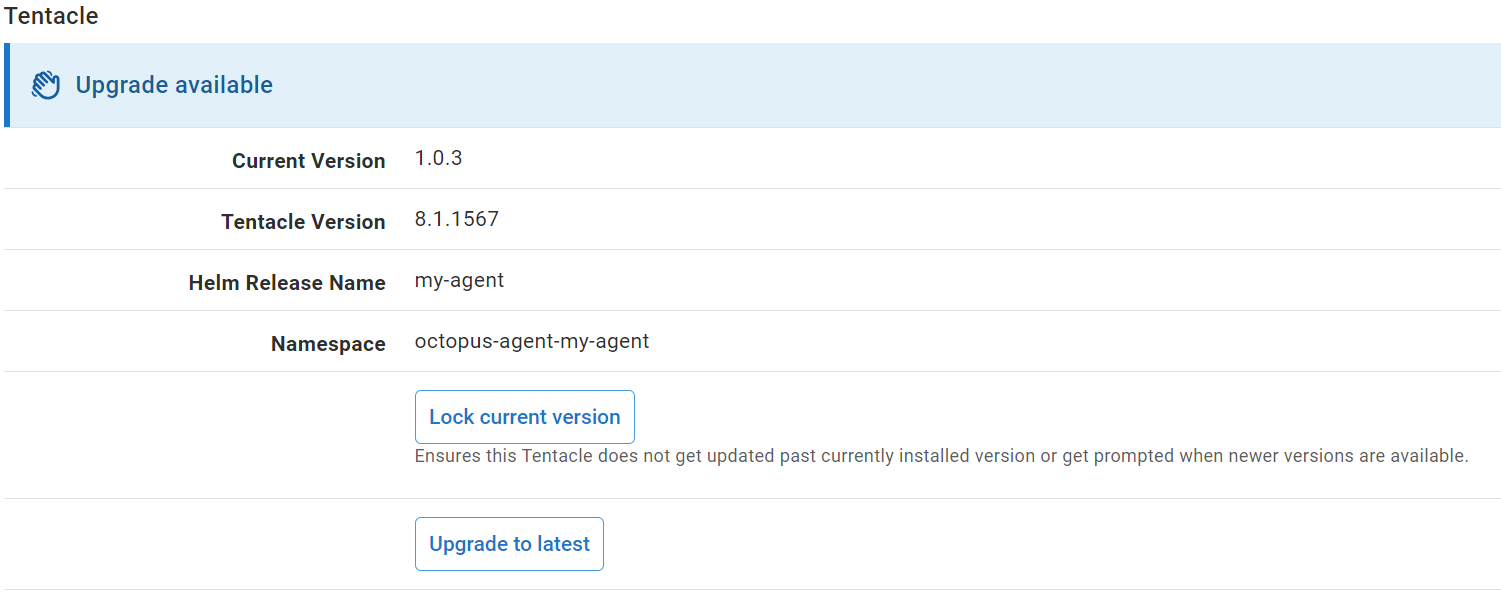

To check if a Kubernetes agent can be manually upgraded, navigate to the Infrastructure ➜ Deployment Targets ➜ [DEPLOYMENT TARGET] ➜ Connectivity page. If the agent can be upgraded, there will be an Upgrade available banner. Clicking the Upgrade to latest button triggers the upgrade via a new task. If the upgrade fails, the previous version of the agent is restored.

Helm upgrade command

To upgrade a Kubernetes agent via helm, note the following fields from the Infrastructure ➜ Deployment Targets ➜ [DEPLOYMENT TARGET] ➜ Connectivity page:

- Helm Release Name

- Namespace

Then, from a terminal connected to the cluster containing the instance, execute the following command:

```bash
helm upgrade --atomic --namespace NAMESPACE HELM_RELEASE_NAME oci://registry-1.docker.io/octopusdeploy/kubernetes-agent
```

Replace NAMESPACE and HELM_RELEASE_NAME with the values noted above.
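When upgrading many agents, the same command can be generated per target. The sketch below builds the argument list from the Helm release name and namespace, optionally pinning a chart version constraint with --version (for example "2.*.*", mirroring the installation command). It only builds the arguments; running them, for example with subprocess.run(args, check=True), is left to you. The function name upgrade_args is hypothetical.

```python
from typing import Optional

CHART = "oci://registry-1.docker.io/octopusdeploy/kubernetes-agent"


def upgrade_args(namespace: str, release: str, version: Optional[str] = None) -> list[str]:
    """Build `helm upgrade` arguments for an existing agent release (hypothetical helper)."""
    args = ["helm", "upgrade", "--atomic", "--namespace", namespace, release, CHART]
    if version:
        # Optional constraint, e.g. "2.*.*" to stay within one major version.
        args += ["--version", version]
    return args
```

Passing a list rather than a single string avoids shell quoting issues with values such as "2.*.*".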

If, after the upgrade command has executed, you find issues with the agent, you can roll back to the previous helm release by executing:

```bash
helm rollback --namespace NAMESPACE HELM_RELEASE_NAME
```

Uninstalling the Kubernetes agent

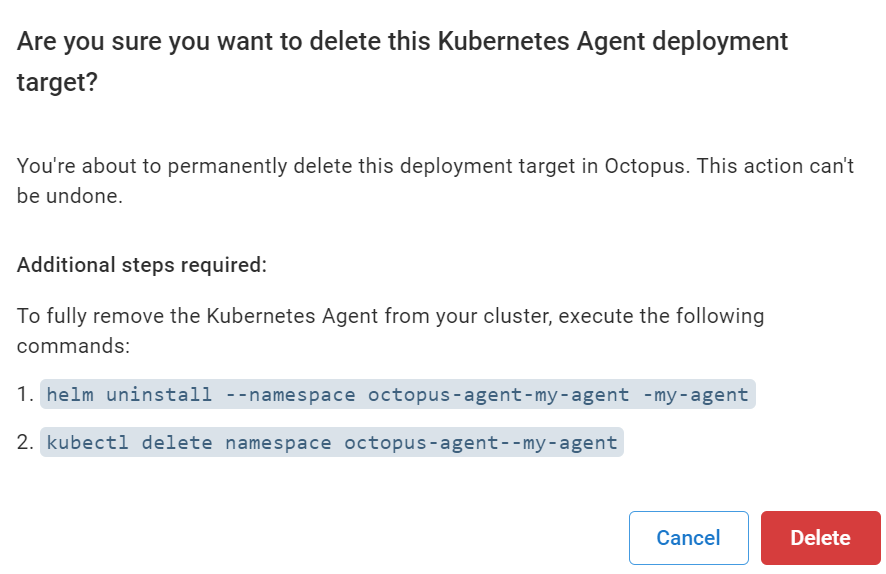

To fully remove the Kubernetes agent, you need to delete the agent from the Kubernetes cluster as well as delete the deployment target from Octopus Deploy.

The deployment target deletion confirmation dialog provides the commands to delete the agent from the cluster. Once these have been successfully executed, you can click Delete to delete the deployment target.


Page updated on Friday, March 28, 2025