To distribute traffic to the Octopus Web Portal across multiple nodes, you need a load balancer. Google Cloud offers two options for distributing HTTP/HTTPS traffic to your Compute Engine instances.

If you are only using Listening Tentacles, we recommend using the HTTP(S) Load Balancer.

However, Polling Tentacles aren’t compatible with the HTTP(S) Load Balancer, so if you use them, we recommend the Network Load Balancer instead. It lets you configure TCP forwarding rules on a specific port to each Compute Engine instance. This is one way to route traffic to each individual node, which Polling Tentacles require when running Octopus High Availability.
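The recommendation above comes down to a single rule: if any Tentacle in the cluster uses polling mode, the HTTP(S) Load Balancer is ruled out. A minimal Python sketch of that decision follows. `TentacleMode` and `recommended_load_balancer` are hypothetical names used only for illustration.

```python
from enum import Enum


class TentacleMode(Enum):
    """Hypothetical model of the two Tentacle communication modes."""
    LISTENING = "listening"
    POLLING = "polling"


def recommended_load_balancer(modes: set[TentacleMode]) -> str:
    """Pick a Google Cloud load balancer type for an Octopus HA cluster.

    Polling Tentacles must reach each individual node, which the HTTP(S)
    Load Balancer cannot do, so a single polling Tentacle is enough to
    require the Network Load Balancer.
    """
    if TentacleMode.POLLING in modes:
        return "Network Load Balancer"
    # Listening Tentacles only (or no Tentacles at all).
    return "HTTP(S) Load Balancer"


if __name__ == "__main__":
    print(recommended_load_balancer({TentacleMode.LISTENING, TentacleMode.POLLING}))
```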

To use Network Load Balancers exclusively for Octopus High Availability with Polling Tentacles, you may need to configure multiple load balancers or forwarding rules:

-

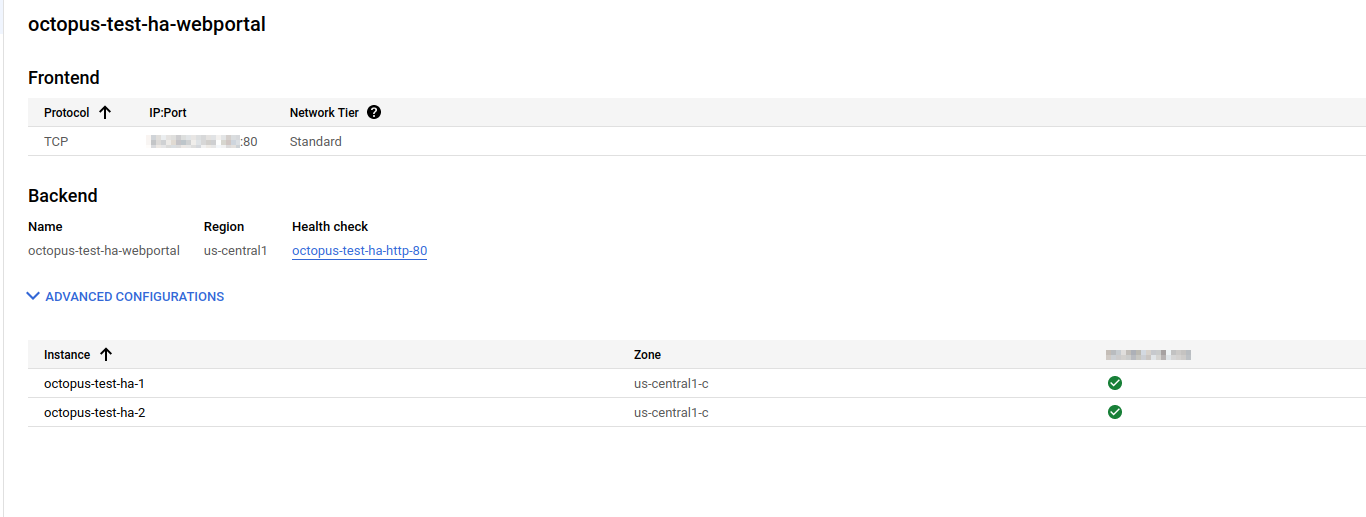

One to serve the Octopus Web Portal HTTP traffic to your backend pool of Compute engine instances:

-

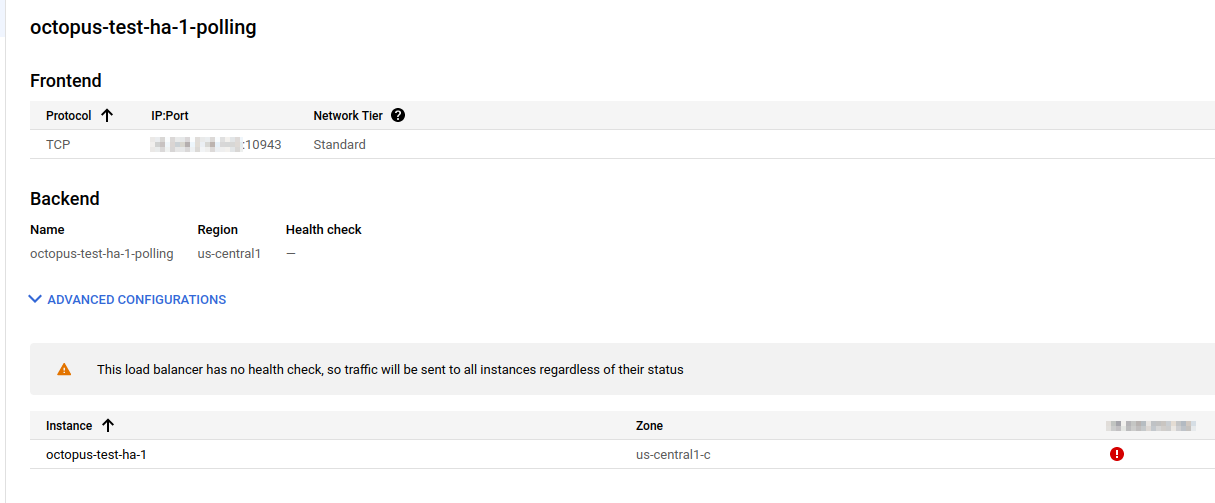

One for each Compute engine instance for Polling Tentacles to connect to:

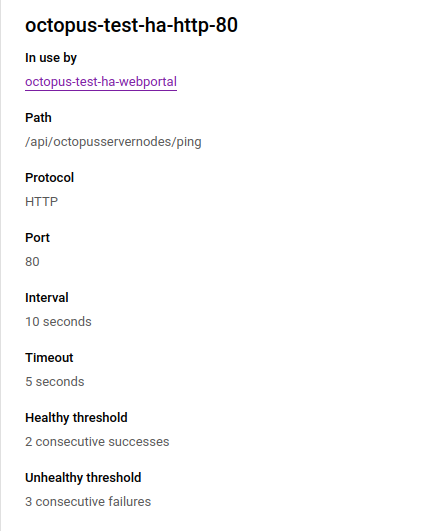

With Network Load Balancers, you can configure a health check to ensure your Compute engine instances are healthy before traffic is served to them:
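As a rough sketch of the resources described above, the following Python script prints the `gcloud` commands for a legacy target-pool based Network Load Balancer. It creates one HTTP health check, one target pool and forwarding rule for the Web Portal, and one target instance plus forwarding rule per node for Polling Tentacles. The region, zone, node and resource names are hypothetical. Serving the Web Portal over plain HTTP on port 80 is an assumption, and so is using `/api/octopusservernodes/ping` as the health check request path. Check all of these against your own setup before running anything. Port 10943 is the default port Octopus Server listens on for Polling Tentacles.

```python
import shlex

# Hypothetical values: replace with your own region, zone and node names.
REGION = "us-central1"
ZONE = "us-central1-a"
WEB_PORT = 80          # assumption: Web Portal served over plain HTTP on port 80
POLLING_PORT = 10943   # default Octopus Server port for Polling Tentacles
PING_PATH = "/api/octopusservernodes/ping"  # assumed node health endpoint


def gcloud(*args: str) -> str:
    """Render a `gcloud compute` command as a single shell-quoted string."""
    return shlex.join(["gcloud", "compute", *args])


def network_lb_commands(nodes: list[str]) -> list[str]:
    """Build (but do not run) the commands for the resources listed above."""
    cmds = [
        # Health check the target pool uses before sending traffic to a node.
        gcloud("http-health-checks", "create", "octopus-health",
               f"--port={WEB_PORT}", f"--request-path={PING_PATH}"),
        # One target pool containing every node, serving the Web Portal.
        gcloud("target-pools", "create", "octopus-web-pool",
               f"--region={REGION}", "--http-health-check=octopus-health"),
        gcloud("target-pools", "add-instances", "octopus-web-pool",
               f"--instances={','.join(nodes)}", f"--instances-zone={ZONE}"),
        gcloud("forwarding-rules", "create", "octopus-web",
               f"--region={REGION}", f"--ports={WEB_PORT}",
               "--target-pool=octopus-web-pool"),
    ]
    for node in nodes:
        # One target instance and forwarding rule per node, so each Polling
        # Tentacle connection reaches one specific node. No --address is
        # given, so each rule gets its own ephemeral external IP.
        cmds.append(gcloud("target-instances", "create", f"{node}-target",
                           f"--instance={node}", f"--zone={ZONE}"))
        cmds.append(gcloud("forwarding-rules", "create", f"{node}-polling",
                           f"--region={REGION}", f"--ports={POLLING_PORT}",
                           f"--target-instance={node}-target",
                           f"--target-instance-zone={ZONE}"))
    return cmds


if __name__ == "__main__":
    for cmd in network_lb_commands(["octopus-node-1", "octopus-node-2"]):
        print(cmd)
```

The script only prints commands, so you can review them before running any. In practice you would probably reserve static IP addresses for the per-node rules so each Polling Tentacle keeps a stable endpoint. Those per-node rules are not covered by the target pool's health check, because each one points at a single instance.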


Page updated on Wednesday, May 22, 2024