In the past few months I've spent quite a bit of time with ASP.NET Core 1, and this week I've been taking a deep dive into Windows Server 2016 and Nano Server. One thing is clear: for developers, how we deploy and run applications is going to change quite substantially when all of this makes its way into production.

Background

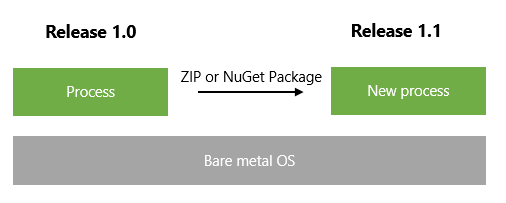

Right now, a tool like Octopus works by taking your application, packaging it into a ZIP, then pushing it to a remote machine. There, it's unzipped, configured, and a new process is launched while an old process is retired. Processes might be:

- An NT Service (we stop the old service, start the new one)

- An IIS website (the old Application Pool worker process dies, a new one is created)
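To make the process-level flow concrete, here is a minimal Python sketch of the unzip-and-switch step. It is not how Octopus itself is implemented: the function name `deploy_package`, the versioned-directory layout and the `current.txt` pointer file are all assumptions made for illustration, and stopping or starting the actual service or Application Pool is left out.

```python
import tempfile
import zipfile
from pathlib import Path


def deploy_package(package: Path, install_root: Path, version: str) -> Path:
    """Unzip a package into its own versioned directory and mark it current.

    Hypothetical layout: <install_root>/<version>/... plus a current.txt
    file naming the active version. A real tool would also stop the old
    process before the switch and start the new one afterwards.
    """
    target = install_root / version
    target.mkdir(parents=True, exist_ok=False)  # refuse to overwrite a release
    with zipfile.ZipFile(package) as archive:
        archive.extractall(target)
    # Record the active version last, so a failed extract never becomes current.
    (install_root / "current.txt").write_text(version)
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        package = root / "MyApp.1.0.0.zip"
        with zipfile.ZipFile(package, "w") as archive:
            archive.writestr("app.config", "setting=value")
        deployed = deploy_package(package, root / "apps", "1.0.0")
        print(sorted(p.name for p in deployed.iterdir()))
```

The point of the sketch is that the only thing that changes between releases is the set of files a process runs from; the operating system underneath stays put.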

As far as the stack goes, it looks either like this:

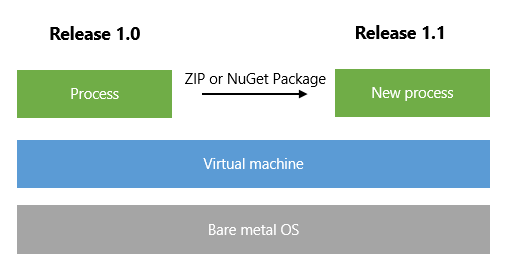

Or perhaps like this:

Whilst you could perhaps create a new virtual machine image for every application release, and deploy a totally new virtual machine and shut the old one down, no one does that today because:

- The VHDX/disk image files are massive, tens of GB at least; and,

- The VM takes forever to boot

(This is why anyone who works with Azure Cloud Services will tell you how much they hate deploying them. Perhaps if Nano Server had been around, Cloud Services would have been huge.)

Thinking about it in a different way: at the moment, the only viable "unit of deployment" which changes between releases of your application is the process. Building and deploying a new VM for every application change is nuts (on Windows, that is).

Windows Server 2016: Nano Server and Containers

There are three flavors of Windows Server 2016. The first two we're already familiar with: Server with a GUI, and Server Core. Nothing interesting there.

The third flavor is Nano Server, and it's a game changer. Here's what's interesting about Nano Server:

- It's ridiculously tiny. A full Nano Server VM image, with IIS, PowerShell and .NET Core, is 750 MB.

- It boots quickly. Time from "turn on" to "IIS responded to an HTTP request" is a few seconds.

- Memory usage is minimal: around 170 MB.

Like a Linux distribution, Nano Server also gives you choices as to how to run it:

- On bare metal

- As a Hyper-V guest machine

- Inside a container

Yes, the other major change in Windows Server 2016 is containers. This is a technology that's been popularized by the likes of Docker on Linux operating systems, and it's coming to Windows.

Many units of application deployment

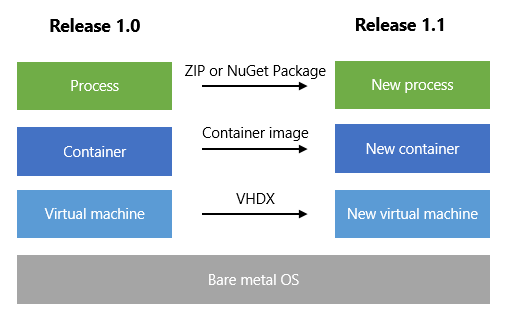

With Nano Server, as you think about releasing and deploying your application in 2016, there are suddenly many options.

Suppose your team is doing two-week sprints, and delivering a new version of the software every two weeks to test, and then eventually production. You now have choices in how you deliver the new software:

- Compile your app and ZIP it (~50 MB) - process-level deployment (today)

- Compile your app, put it in a container image (Docker build -> image) - container-level deployment

- Compile your app, and generate a new Nano Server VHDX (~800 MB) - VM-level deployment

The tiny size of Nano Server images makes them a very plausible "build artifact" in your continuous delivery pipeline. Why build an MSI or ZIP, when you can build an entire VM image, using roughly the same amount of time and artifact size?
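A rough back-of-the-envelope calculation shows why the size matters. The Python sketch below uses the ~50 MB and ~800 MB figures from the list above; the 20 GB "traditional VM image" and the 1 Gbit/s link are assumptions picked purely for illustration, and latency and protocol overhead are ignored.

```python
def transfer_seconds(size_mb: float, link_mbit_per_s: float) -> float:
    """Time to copy an artifact, ignoring latency and protocol overhead."""
    return size_mb * 8 / link_mbit_per_s


LINK_MBIT_PER_S = 1000  # assumed 1 Gbit/s link between build server and host

artifacts_mb = {
    "ZIP package": 50,               # ~50 MB, from the list above
    "Nano Server VHDX": 800,         # ~800 MB, from the list above
    "traditional VM image": 20_000,  # assumed 20 GB stand-in for "tens of GB"
}

for name, size_mb in artifacts_mb.items():
    print(f"{name}: {transfer_seconds(size_mb, LINK_MBIT_PER_S):.1f} s")
```

Under those assumptions the Nano Server image moves in seconds rather than minutes, which is what makes it realistic to ship one on every build.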

For Octopus, this means we need to begin to operate at each level of this stack. At the moment, when you create a release, you choose the version of NuGet/ZIP packages to include in the release. In the future, this will mean:

- Choosing the NuGet/ZIP packages that make up this release: Octopus deploys to an existing, running target

- Choosing the Docker/container images that make up this release: Octopus deploys to a Docker/container host

- Choosing the VHDXs that make up this release: Octopus deploys to a Hyper-V host
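One way to picture this is as a release that carries a mix of artifact kinds, each routed to a different kind of target. The Python sketch below is purely illustrative: `ArtifactKind`, `Artifact`, `TARGET_FOR_KIND` and `plan_release` are hypothetical names, not part of any Octopus API, and the artifact names and versions are made up.

```python
from dataclasses import dataclass
from enum import Enum


class ArtifactKind(Enum):
    PACKAGE = "NuGet/ZIP package"
    CONTAINER_IMAGE = "container image"
    VHDX = "VHDX"


# Which kind of target each artifact kind is deployed to, per the list above.
TARGET_FOR_KIND = {
    ArtifactKind.PACKAGE: "existing running machine",
    ArtifactKind.CONTAINER_IMAGE: "container host",
    ArtifactKind.VHDX: "Hyper-V host",
}


@dataclass(frozen=True)
class Artifact:
    name: str
    version: str
    kind: ArtifactKind


def plan_release(artifacts: list[Artifact]) -> list[str]:
    """Describe where each artifact in a release would be deployed."""
    return [
        f"{a.name} {a.version} ({a.kind.value}) -> {TARGET_FOR_KIND[a.kind]}"
        for a in artifacts
    ]


# Made-up release mixing all three units of deployment.
release = [
    Artifact("MyApp.Web", "1.4.0", ArtifactKind.PACKAGE),
    Artifact("myapp-worker", "1.4.0", ArtifactKind.CONTAINER_IMAGE),
    Artifact("myapp-nano", "1.4.0", ArtifactKind.VHDX),
]
for step in plan_release(release):
    print(step)
```

Nothing stops a single release from mixing all three kinds, which is why the tooling has to understand each level of the stack rather than just one.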

Trade offs, and how to choose

The big benefits of these changes are isolation and security: Microsoft are all about Azure right now, so the investment in being able to run tiny, fully isolated OS images makes a ton of sense.

Isolation is also a benefit in the enterprise. How many times have you needed to deploy a new version of an application, or wanted to deploy a new version of, say, the .NET runtime, but been told you can't because it might break some other application on the system?

On the other hand, container images and VHDXs make deploy-time configuration a little more clunky. In an ideal world, we'd use the same container image/VHDX in our Test as well as Production environments. But Test and Production might use different database connection strings.

- With process-level deployment, it's as easy as modifying a configuration file after unzipping

- With container-level deployment, you can feed some variables/connectors into the container, but that isn't as flexible

- With VHDX based deployment, you either build a different VHDX per environment, or boot the VM and configure it first
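The difference can be sketched from the application's side. In this hypothetical Python example the app prefers a value injected through an environment variable (the container-style approach) and falls back to a configuration file that a deployment tool rewrote after unzipping (the process-style approach). The variable name `APP_CONNECTION_STRING`, the JSON file format and the function name are assumptions for illustration only.

```python
import json
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_connection_string(
    config_file: Path,
    env: Optional[Mapping[str, str]] = None,
) -> str:
    """Return the connection string for the current environment.

    APP_CONNECTION_STRING and the "connectionString" JSON key are
    hypothetical names chosen for this sketch.
    """
    env = os.environ if env is None else env
    injected = env.get("APP_CONNECTION_STRING")
    if injected:
        # Container-level: the image is identical everywhere, and the value
        # is supplied from outside when the container starts.
        return injected
    # Process-level: the deployment tool edited this file after unzipping.
    settings = json.loads(config_file.read_text())
    return settings["connectionString"]
```

With this shape the same build can run unchanged in Test and Production, but anything richer than a handful of injected values (swapping whole files, enabling add-ons) is still easier when the deployment tool can reach the file system directly.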

As another example, perhaps your application allows different add-on packages to be enabled per deployment. Again, it's going to be easier to "compose" these applications at runtime when deploying processes, than trying to compose them in a VHDX if that's the unit of deployment.

Regardless of how you deliver new builds of your application - as ZIPs, as Docker images, as VHDXs or bootable USB thumb drives - Nano Server is an option. And it's a pretty compelling one.

.NET Core and Nano Server are the future

Gazing into my crystal ball, I will make two bold predictions:

- The full .NET Framework is now legacy; 5 years from now, most greenfield C#/.NET applications will target .NET Core

- Windows Server with a GUI and Server Core are now legacy; most greenfield environments will be built on Nano Server

Think about it this way: anything really important for server apps has either been ported to .NET Core, or will be over the next few years. If your app can target .NET Core, then it should. There's no benefit to limiting yourself to the full .NET Framework, and the only reasons that would tie you to it will diminish over time as those technologies die.

And if your app targets .NET Core, then it should be able to run on Nano Server. Why keep all the overhead of Server with a GUI or Server Core if you can run on tiny, fast, small surface area Nano Server?

That said, I'm sure the "full flavors" of Windows Server will continue to exist for a long time, mostly for the servers that are treated as "pets" rather than "cattle". Examples will be domain controllers, SQL Server hosts, etc. - but it will be a small niche. For the bulk of servers that scale horizontally (app and web servers), Nano Server is the future.